Competing with recent AI releases from other Big Tech companies, Anthropic released Claude 3.5 Sonnet, which is also available through Amazon Bedrock, calling it “the most powerful AI model to date.”

According to Anthropic, the new model “outperforms other models available today, including OpenAI’s GPT-4o and Google’s Gemini 1.5 Pro, as well as Anthropic’s own previously most capable model, Claude 3 Opus, in areas including expert knowledge, coding, and complex reasoning.”

With more and more AI options available, the real measure of each model's success will depend on which one users find best suited to their real-world needs.

About Claude 3.5 Sonnet:

According to Anthropic, this AI model excels at “complex tasks such as context-sensitive customer support, orchestrating multi-step workflows, streamlining code translations, and creating revenue-generating user-facing applications.”

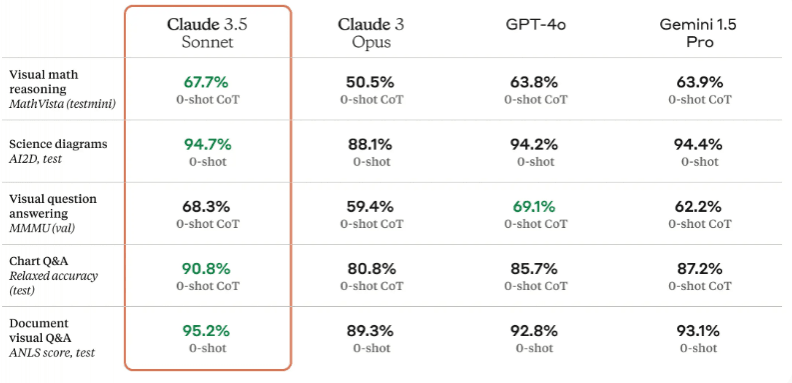

It is also adept at “creative writing, representing a step-change in its ability to understand nuance and humor.” It has advanced capabilities to process “images with state-of-the-art vision, particularly when interpreting charts and graphs—helping to get faster, deeper insights from data.”

Reports have also noted the model’s enhanced coding abilities, with “sophisticated reasoning and advanced troubleshooting capabilities, offering best-in-class accuracy.”

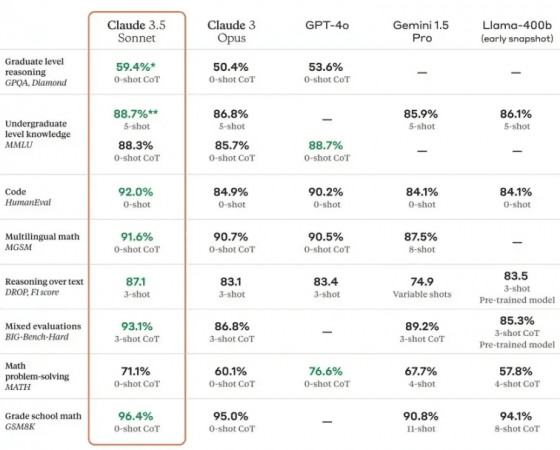

Results from the comparative testing of competing models:

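To explain some of the technical terms used above: “0-shot” means the model is given no worked examples in the prompt before the test question (a “5-shot” test, by contrast, includes five solved examples first). “CoT”, or chain of thought, means the model is prompted to reason through the problem step by step before giving its final answer. Neither term refers to the number of attempts or cycles a model takes to answer.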
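In benchmark reports, “N-shot” counts the worked examples placed in the prompt, and “CoT” means the model is asked to reason step by step. The minimal Python sketch below only assembles prompt text to show that difference; the `build_prompt` helper, the “Q:/A:” layout and the exact chain-of-thought wording are illustrative assumptions, not Anthropic’s actual evaluation setup, and no model is called.

```python
from typing import Sequence, Tuple


def build_prompt(question: str,
                 examples: Sequence[Tuple[str, str]] = (),
                 chain_of_thought: bool = False) -> str:
    """Hypothetical helper: build an N-shot prompt, where N = len(examples)."""
    parts = []
    # Each solved example is one "shot"; an empty sequence gives a 0-shot prompt.
    for q, a in examples:
        parts.append(f"Q: {q}\nA: {a}")
    # With chain of thought, the model is nudged to reason before answering.
    suffix = "A: Let's think step by step." if chain_of_thought else "A:"
    parts.append(f"Q: {question}\n{suffix}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # 0-shot CoT: no examples, but an explicit request to reason step by step.
    print(build_prompt("What is 17 * 3?", chain_of_thought=True))
    print("---")
    # 2-shot, no CoT: two solved examples precede the real question.
    print(build_prompt("What is 5 - 1?",
                       examples=[("What is 1 + 1?", "2"), ("What is 3 + 3?", "6")]))
```

The model and question stay the same in every setting; only the prompt changes, which is why scores are labelled with the setting used.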

Functions like ‘Reasoning over text’ measure how well each model extracts information from text passages and performs calculations on it. ‘Mixed evaluations’ measure how well each model handles a varied set of complex tasks that call for multi-step reasoning and creativity.

The images above are based on Anthropic’s own testing. Some key performance highlights:

- Claude 3.5 Sonnet outperforms the other models, ranking first in 11 of the 13 functions measured.

- GPT-4o ties for 1st with Claude 3.5 Sonnet on ‘Undergraduate level knowledge’ and ranks 1st on ‘Math problem-solving’ and ‘Visual question answering’. It comes a close second on 4 other measures where information was available.

- Gemini 1.5 Pro takes 2nd place, narrowly ahead of GPT-4o, in ‘Visual math reasoning’, ‘Science diagrams’, ‘Chart Q&A’ and ‘Document visual Q&A’. It also ranks 2nd in ‘Mixed evaluations’ and ‘Grade school math’, for 6 second-place finishes in total. It ranks 3rd among the reported models in the remaining categories where information was available.

The three AI models, Claude 3.5 Sonnet, GPT-4o and Gemini 1.5 Pro, share some basic features, though they differ in approach, scope and appearance. These shared features include improved user experiences through more perceptive AI modelling, accessibility features for people with disabilities and physical impairments, and advances in privacy and safety.

It is important to note that other independent sources have used different function measures, with varying results. However, the general trend suggests that Claude 3.5 Sonnet is a credible competitor to the other AI models. Further comparative testing and real-world application tests should give a more accurate picture, which will be especially significant in determining user preferences.

![Is Claude 3.5 Sonnet better than GPT-4o and Gemini 1.5 Pro: Anthropic thinks so](https://data1.ibtimes.co.in/en/full/799539/claude-3-5-sonnet.png)